There seem to be a lot of sightings of “Black Swans” lately. Should we be concerned? Or are we engaging in wishful thinking, caught up in media hype, or misinterpreting what a “Black Swan” event really is? The term “Black Swan” has become a popular buzzword for many, including contingency planners, risk managers and consultants. But do that many occurrences really meet the criteria for being termed a “Black Swan,” or are we just caught up in the popularity of the moment?

Nassim Taleb, author of “The Black Swan: The Impact of the Highly Improbable,” defines a Black Swan as follows:

“A black swan is a highly improbable event with three principal characteristics: it is unpredictable; it carries a massive impact; and, after the fact, we concoct an explanation that makes it appear less random, and more predictable, than it was.”

The Problem

Because rare events with serious consequences occur so seldom, there is a general lack of knowledge about them. In his book, Taleb states that “the effect of a single observation, event or element plays a disproportionate role in decision-making creating estimation errors when projecting the severity of the consequences of the event. The depth of consequence and the breadth of consequence are underestimated resulting in surprise at the impact of the event.”

To quote again from Taleb, “The problem, simply stated (which I have had to repeat continuously) is about the degradation of knowledge when it comes to rare events (“tail events”), with serious consequences in some domains I call “Extremistan” (where these events play a large role, manifested by the disproportionate role of one single observation, event, or element, in the aggregate properties). I hold that this is a severe and consequential statistical and epistemological problem as we cannot assess the degree of knowledge that allows us to gauge the severity of the estimation errors. Alas, nobody has examined this problem in the history of thought, let alone try to start classifying decision-making and robustness under various types of ignorance and the setting of boundaries of statistical and empirical knowledge. Furthermore, to be more aggressive, while limits like those attributed to Gödel bear massive philosophical consequences, but we can’t do much about them, I believe that the limits to empirical and statistical knowledge I have shown have both practical (if not vital) importance and we can do a lot with them in terms of solutions, with the “fourth quadrant approach”, by ranking decisions based on the severity of the potential estimation error of the pair probability times consequence (Taleb, 2009; Makridakis and Taleb, 2009; Blyth, 2010, this issue).”
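Taleb’s suggestion to rank decisions by the severity of the potential estimation error in “probability times consequence” can be made concrete with a toy calculation. The sketch below is not Taleb’s fourth-quadrant method; it is a hypothetical illustration that assumes each exposure has a point-estimate probability, a consequence, and an invented `error_factor` by which the true probability might be wrong in either direction. The `Exposure` class, `rank` function and both example exposures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    name: str
    probability: float   # hypothetical point estimate of the event's probability
    consequence: float   # hypothetical loss if the event occurs (dollars)
    error_factor: float  # assumption: true probability may be off by this factor either way

    def expected_loss(self) -> float:
        """Naive point estimate: probability times consequence."""
        return self.probability * self.consequence

    def estimation_error_span(self) -> float:
        """Width of the plausible range of probability times consequence."""
        low = (self.probability / self.error_factor) * self.consequence
        high = min(self.probability * self.error_factor, 1.0) * self.consequence
        return high - low


def rank(exposures, key):
    """Return exposure names ordered from largest to smallest by the given key."""
    return [e.name for e in sorted(exposures, key=key, reverse=True)]


# Invented example data: a frequent, well-understood loss and a rare, poorly understood one.
exposures = [
    Exposure("routine equipment failure", 0.5, 1e6, 1.2),
    Exposure("platform loss", 0.0001, 1e9, 20.0),
]

if __name__ == "__main__":
    print(rank(exposures, Exposure.expected_loss))          # ['routine equipment failure', 'platform loss']
    print(rank(exposures, Exposure.estimation_error_span))  # ['platform loss', 'routine equipment failure']
```

Ranked by the point estimate alone, the routine failure looks like the bigger exposure; ranked by how wrong the estimate could plausibly be, the rare, high-consequence event moves to the top, which is roughly the shift in attention the quote argues for.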

There was a great deal of intense media focus (the crisis of the moment) on the eruption of the Icelandic volcano Eyjafjallajokull and the recent Deepwater Horizon catastrophe. Note that the media paid less attention to the subsequent sinking of the Aban Pearl, an offshore platform in Venezuela, on 13 May 2010.

Some have classified the recent eruption of the Icelandic volcano Eyjafjallajokull and the Deepwater Horizon catastrophe as Black Swan events. If these are Black Swans, shouldn’t we also classify the Aban Pearl as a Black Swan? Or is the Aban Pearl not a Black Swan because it did not get the media attention that Deepwater Horizon has been receiving? Note also that Taleb’s definition of a Black Swan consists of three elements:

“it is unpredictable; it carries a massive impact; and, after the fact, we concoct an explanation that makes it appear less random.”

While the events cited above meet some of the criteria for a Black Swan, such as unpredictability, the massive impact of each has yet to be determined, and we have yet to see explanations that make these events appear less random.

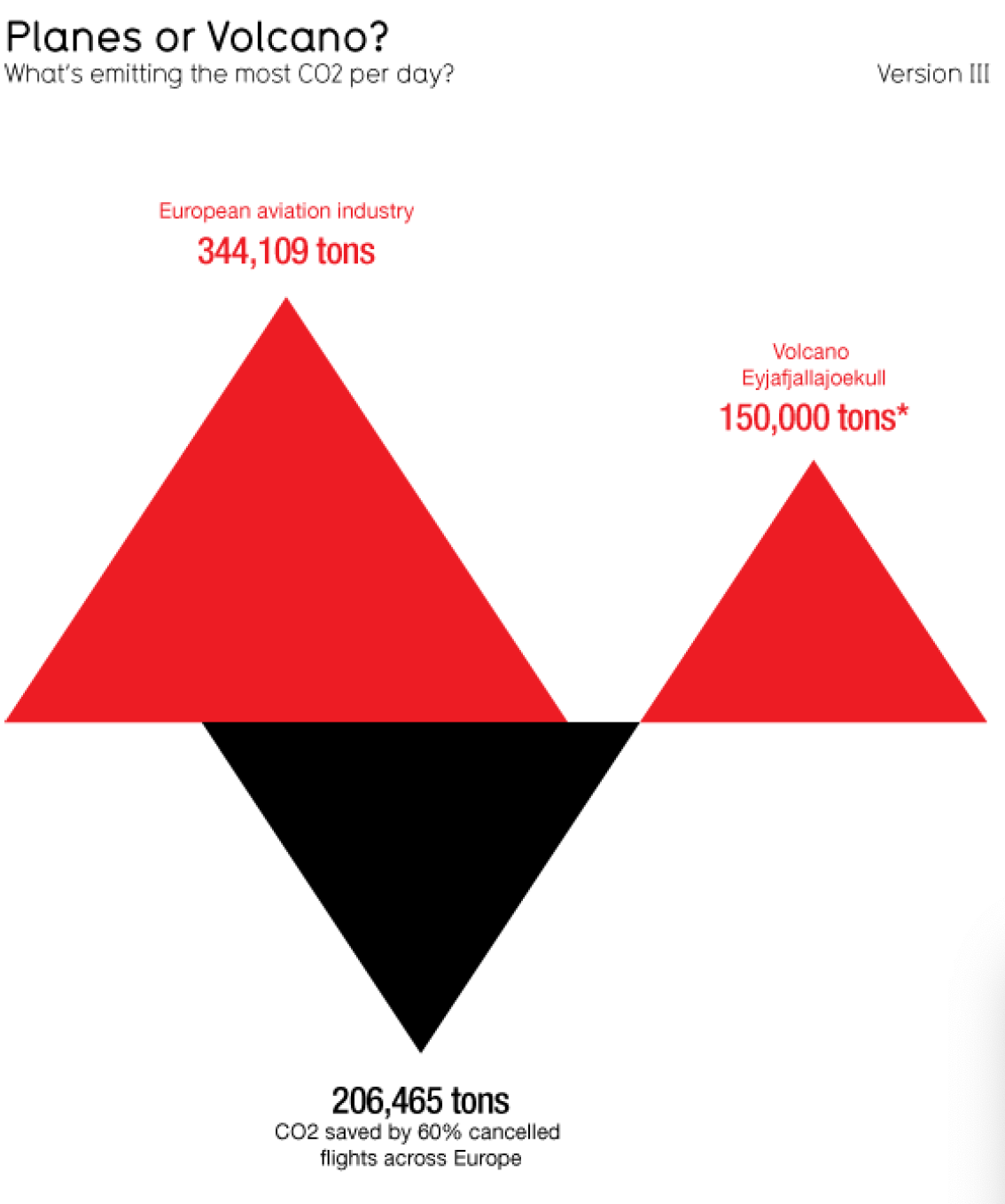

Interestingly, according to Taleb in the recently published latest edition of “The Black Swan,” the Icelandic volcano Eyjafjallajokull may qualify as a “White Swan.” On 20 April 2010 (the date of the Deepwater Horizon event), Eyjafjallajokull was emitting between “150,000 and 300,000” tons of CO2 a day. This compares with airline industry emissions of almost 345,000 tons, according to an article entitled “Planes or Volcano?”, originally published on 16 April 2010 and updated on 20 April 2010 (http://bit.ly/planevolcano).
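A quick ratio makes the comparison concrete. It assumes that the 345,000-ton airline figure is also a daily total, which the figures quoted above do not state explicitly:

$$
\frac{150{,}000\ \text{t/day}}{345{,}000\ \text{t/day}} \approx 0.43,
\qquad
\frac{300{,}000\ \text{t/day}}{345{,}000\ \text{t/day}} \approx 0.87
$$

$$
345{,}000 - 300{,}000 = 45{,}000\ \text{t/day},
\qquad
345{,}000 - 150{,}000 = 195{,}000\ \text{t/day}
$$

On that assumption, the volcano emitted roughly 43% to 87% of the airline figure; if the emissions of grounded flights were in fact avoided, the net daily effect would be a reduction of roughly 45,000 to 195,000 tons.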

We can still only estimate the potentially massive impact of the Deepwater Horizon event (at the time of this writing, the release remains uncontrolled despite BP’s efforts to contain it). The loss of the Aban Pearl, by contrast, was announced by President Hugo Chavez on Twitter: “To my sorrow, I inform you that the Aban Pearl gas platform sank moments ago. The good news is that 95 workers are safe.”

Venezuela’s energy and oil minister, Rafael Ramirez, said there had been a problem with the flotation system of the semi-submersible platform, causing it to keel over and sink. Ramirez also said that a tube connecting the rig to the gas field had been disconnected and safety valves activated, so there was no risk of a gas leak. The incident came less than a month after the explosion that destroyed the Deepwater Horizon rig in the Gulf of Mexico.

At the time of this writing, oil prices are actually declining rather than rising, as would be expected after a Black Swan event (perhaps we should rethink Deepwater Horizon and Aban Pearl and classify them as “White Swan” events?). What media-driven hype leads the general public to perceive as a Black Swan may, in fact, not be a Black Swan at all for a minority of key decision makers, executives and involved parties. This poses a significant challenge for planners, strategists and CEOs.

Challenges for Planners, Strategists and CEOs

I would not necessarily classify the recent eruption of the Icelandic volcano Eyjafjallajokull, the Deepwater Horizon catastrophe or the Aban Pearl sinking as Black Swan events, although their impact (yet to be fully determined) may be far-reaching. None of them was unexpected: the volcano existed and has erupted before, and offshore rigs have exploded and sunk before (e.g., Piper Alpha on 6 July 1988, which killed 167 people and cost $1.27 billion). Still, the three events do have Black Swan qualities when viewed in the context of today’s complex global environment. This, I believe, is the greatest challenge for strategists, planners and CEOs: to develop strategies that are flexible enough to adapt to unforeseen circumstances while still meeting corporate goals and objectives. This requires rethinking contingency planning, competitive intelligence activities and cross-functional relationships, both internal and external.

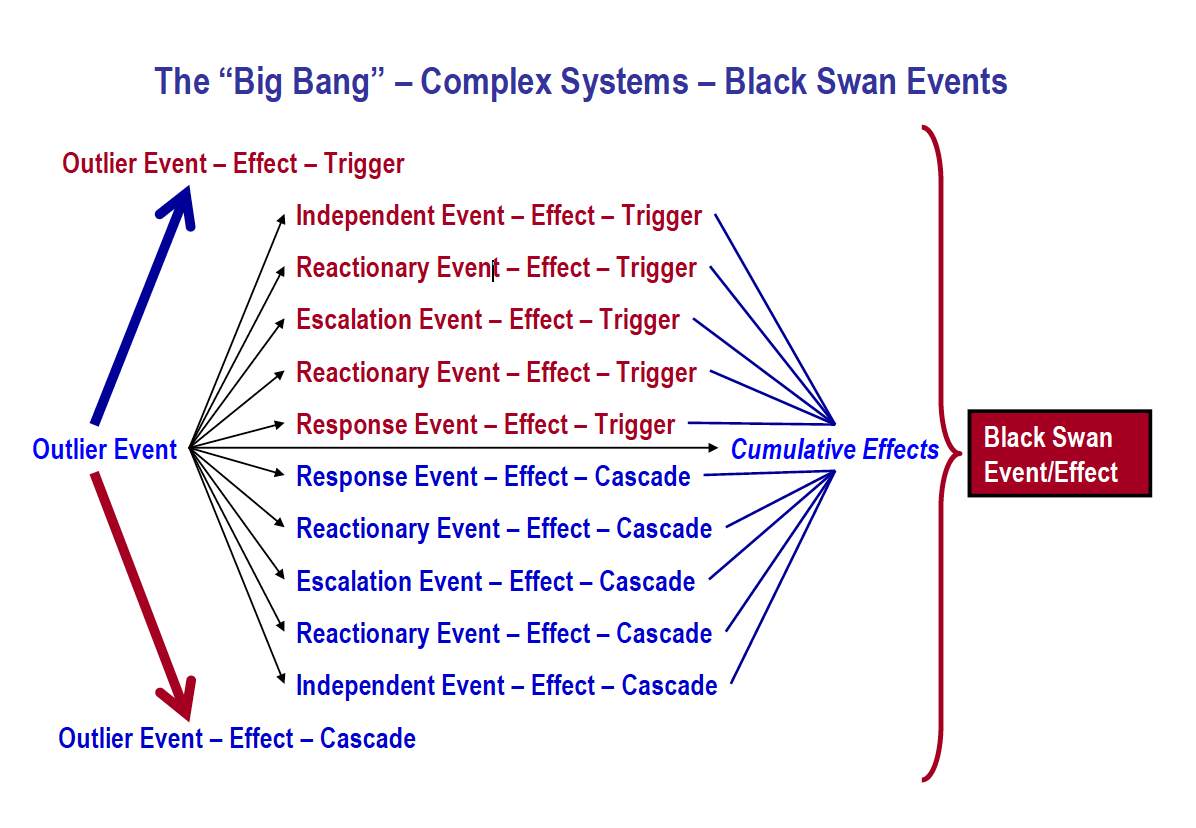

Figure 1, “The ‘Big Bang’ – Complex Systems – Black Swan Events,” depicts the effect of an outlier event that triggers independent and reactionary events, which together produce a cumulative Black Swan event or effect.

Figure 1 recognizes four elements:

❑ Agents (Outlier Events) acting in parallel

❑ Continuously changing circumstances

❑ Reactionary responses create potential cascades, resulting in cumulative effects

❑ Lack of pattern recognition leads to a failure to anticipate the future

How does one overcome the cumulative effect of outlier events? We have to rethink business operations and begin to focus on what I will term “Strategy at the edge of chaos.” This should not be considered a radically new concept in management thinking; rather, it recognizes that while strategic concepts are the threshold of management theory, appropriate strategic responses do not always happen fast enough. Markets are not in static equilibrium: the recent crisis in Europe has cascaded from Greece to concerns over the banking systems of Spain and Portugal, and may even see Germany leave the European Union. Markets and organizations tend to be reactive, evolving and difficult to predict and control.

Complex Adaptive Systems

Unpredictability is the new normal. Rigid forecasts, cast in stone, cannot be changed without reputational damage; strategists, planners and CEOs are therefore better served by making assumptions. An assumption can be changed or adjusted; assumptions are flexible and less damaging to an enterprise’s (or a person’s) reputation. Unpredictability can be positive or negative. Never underestimate the impact of change (we live in a rapidly changing, interconnected world), inflation (not just monetary inflation, but also the inflated impact of improbable events), opportunity (recognize the “White Swan” effect) and the ultimate consumer (the effect of losing customers is most often overlooked in contingency plans).

12 Steps to get from here to there and temper the impact of Black Swans

Michael J. Kami, author of “Trigger Points: How to Make Decisions Three Times Faster,” wrote that an increased rate of knowledge creates increased unpredictability. Stanley Davis and Christopher Meyer, authors of “Blur: The Speed of Change in the Connected Economy,” cite speed, connectivity and intangibles as key driving forces. Taken in the context of the Black Swan as Taleb defines it, these points show that our increasingly complex systems (the globalized economy, etc.) are at risk. Kami outlines 12 steps in his book that provide some useful insight; how you apply them to your enterprise may improve its ability to temper the impact of Black Swan events.

Step 1: Where Are We? Develop an External Environment Profile

Key focal point: What are the key factors in our external environment and how much can we control them?

Step 2: Where Are We? Develop an Internal Environment Profile

Key focal point: Build detailed snapshots of your business activities as they are at present.

Step 3: Where Are We Going? Develop Assumptions about the Future External Environment

Key focal point: Catalog future influences systematically; know your key challenges and threats.

Step 4: Where Can We Go? Develop a Capabilities Profile

Key focal point: What are our strengths and needs? How are we doing in our key results and activities areas?

Step 5: Where Might We Go? Develop Future Internal Environment Assumptions

Key focal point: Build assumptions, potentials, etc. Do not build predictions or forecasts! Assess what the future business situation might look like.

Step 6: Where Do We Want to Go? Develop Objectives

Key focal point: Create a pyramid of objectives; redefine your business; set functional objectives.

Step 7: What Do We Have to Do? Develop a Gap Analysis Profile

Key focal point: What will be the effect of new external forces? What assumptions can we make about future changes to our environment?

Step 8: What Could We Do? Opportunities and Problems

Key focal point: Act to fill the gaps. Conduct an opportunity-problem feasibility analysis; risk analysis assessment; resource-requirements assessment. Build action program proposals.

Step 9: What Should We Do? Select Strategy and Program Objectives

Key focal point: Classify strategy and program objectives; make explicit commitments; adjust objectives.

Step 10: How Can We Do It? Implementation

Key focal point: Evaluate the impact of new programs.

Step 11: How Are We Doing? Control

Key focal point: Monitor external environment. Analyze fiscal and physical variances. Conduct an overall assessment.

Step 12: Change What’s Not Working. Revise, Control, Remain Flexible

Key focal point: Revise strategy and program objectives as needed; revise explicit commitments as needed; adjust objectives as needed.

I would add the following comments to Kami’s 12 steps and to Davis and Meyer’s points on speed, connectivity and intangibles. Understanding the complexity of an event can help an organization adapt, provided it can broaden its strategic approach. Within the context of complexity, unrecognized touchpoints create potential chaos for an enterprise and for complex systems. Positive and negative feedback systems need to be observed and acted on promptly. The biggest single threat to an enterprise will be staying with a previously successful business model too long and being unable to adapt to fluid situations (i.e., Black Swans). Failing to recognize weak cause-and-effect linkages is dangerous, because small and isolated changes can have huge effects. Ever-growing complexity will make the strategic challenge more urgent for strategists, planners and CEOs.

Taleb offers two definitions in his book “The Black Swan.” The first is “Mediocristan,” a domain dominated by the mediocre, with few extreme successes or failures. In Mediocristan no single observation can meaningfully affect the aggregate. The present is described, and the future forecast, through heavy reliance on past historical information, and there is a heavy dependence on independent probabilities.

The second is “Extremistan,” a domain where the total can conceivably be impacted by a single observation. In Extremistan it is recognized that the most important events by far cannot be predicted; there is therefore less dependence on theory. Extremistan is focused on conditional probabilities. Rare events must always be unexpected; otherwise they would not occur, and they would not be rare.
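The difference between the two domains can be illustrated with a small simulation that measures how much of the total the single largest observation accounts for. This is a sketch under stated assumptions, not Taleb’s own example: it uses Gaussian human heights (mean 170 cm, standard deviation 10 cm) as a stand-in for Mediocristan and Pareto-distributed, wealth-like values with a tail index of 1.1 as a stand-in for Extremistan. The parameters and the function names `largest_share` and `compare_domains` are illustrative choices.

```python
import random


def largest_share(samples):
    """Fraction of the total contributed by the single largest observation."""
    return max(samples) / sum(samples)


def compare_domains(n=10_000, seed=42):
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Assumed Mediocristan proxy: heights in cm, thin-tailed Gaussian.
    heights = [rng.gauss(170.0, 10.0) for _ in range(n)]
    # Assumed Extremistan proxy: wealth-like values, Pareto with tail index 1.1.
    wealth = [rng.paretovariate(1.1) for _ in range(n)]
    return largest_share(heights), largest_share(wealth)


if __name__ == "__main__":
    med, ext = compare_domains()
    print(f"Mediocristan: largest observation = {med:.4%} of total")
    print(f"Extremistan:  largest observation = {ext:.2%} of total")
```

Under these assumptions the tallest person is a negligible sliver of total height, while the largest Pareto draw typically accounts for a visible share of the total; the exact figures depend on the seed and sample size, which is itself part of the point about Extremistan.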

When faced with the unexpected presence of the unexpected, normality believers (Mediocristanians) will tremble and exacerbate the downfall. Common sense dictates that relying on the record of the past (history) to forecast the future is not very useful. You will never be able to capture all the variables that affect decision making. We forget that there is something new in the picture that distorts everything so much that it makes past references useless. Put simply, today we face asymmetric threats (Black Swans and White Swans) that can include the use of surprise in all its operational and strategic dimensions, and the introduction and use of products or services in ways your organization and the markets you serve did not plan for. Asymmetric threats (not fighting fair) also include the prospect of an opponent designing a strategy that fundamentally alters the market in which you operate.

Conclusion

In the revised second edition of “The Black Swan,” Taleb poses the following question: “How much more difficult is it to recreate an ice cube from a puddle than it is to forecast the shape of the puddle from the ice cube?” His point is that we confuse the two arrows: ice cube to puddle is not the same as puddle to ice cube. Ice cubes and puddles come in different sizes and shapes, and many different ice cubes could have produced the same puddle. Thinking that we can go from theory to practice and from practice back to theory with equal ease creates the potential for failure.

While the Icelandic volcano’s consequences will be non-regulatory, they could yet prove far-reaching. The regulatory deluge expected as a result of Deepwater Horizon, by contrast, could be a watershed event for the offshore drilling industry, much as the Oil Pollution Act of 1990 changed many oil companies’ shipping operations.

It takes 90 million barrels of oil per day globally, as well as millions of tons of coal and billions of cubic feet of natural gas, to enable modern society to operate as it does. We fail to see transparent vulnerabilities because they are all too recognizable and are therefore dismissed all too readily. To escape the trap of transparent vulnerabilities, we need to overcome our natural tendency toward diagnostic bias.

A diagnostic bias arises when four elements combine to create a barrier to effective decision making. Recognizing diagnostic bias before it debilitates effective decision making can make all the difference in day-to-day operations, and it is essential in crisis situations to avoid compounding initial errors. The four elements of diagnostic bias are:

❑ Labeling

❑ Loss Aversion

❑ Commitment

❑ Value Attribution

Labeling creates blinders; it prevents you from seeing what is clearly before your face, because all you see is the label. Loss aversion is essentially how far you are willing to go (how long you will continue on a course) to avoid a loss. Closely linked to loss aversion, commitment is a powerful force that shapes our thinking and decision making; it takes the form of rigidity and inflexibility of focus. Once we are committed to a course of action, it is very difficult to recognize objective data, because we tend to see what we want to see and cast aside information that conflicts with our vision of reality. Value attribution begins with first encounters: initial impressions shape the value we attribute and therefore shape our future perception. Once we attribute a certain value, it dramatically alters our perception of subsequent information, even when the value attributed (assigned) is completely arbitrary.

Recognize that we are all swayed by factors that have nothing to do with logic or reason. Because of diagnostic biases, there is a natural tendency not to see transparent vulnerabilities. We make diagnostic errors when we narrow our field of possibilities and zero in on a single interpretation of a situation or person. While such constructs help us quickly assess a situation and form a temporary hypothesis about how to react (an initial opinion), they are restrictive: they rest on limited exposure time and limited data, and they overlook transparent vulnerabilities.

The best strategy for dealing with disoriented thinking is to be mindful (aware) and to observe things for what they are (situational awareness), not for what they appear to be. Accept that your initial impressions could be wrong. Do not rely too heavily on preemptive judgments; they can short-circuit more rational evaluations. Are we asking the right questions? When was the last time you asked, “What Variables (outliers, transparent vulnerabilities) have we Overlooked?”

My colleague John Stagl adds the following regarding value: value is the receiver’s perception of the product or service being offered. Value is, therefore, never absolute; it is set by the receiver.

Some final thoughts:

❑ If your organization is content with reacting to events, it may not fare well

❑ Innovative, aggressive thinking is one key to surviving

❑ Recognition that theory is limited in usefulness is a key driving force

❑ Strategically nimble organizations will benefit

❑ Constantly question assumptions about what is “normal”

Ten blind spots:

#1: Not Stopping to Think

#2: What You Don’t Know Can Hurt You

#3: Not Noticing

#4: Not Seeing Yourself

#5: My Side Bias

#6: Trapped by Categories

#7: Jumping to Conclusions

#8: Fuzzy Evidence

#9: Missing Hidden Causes

#10: Missing the Big Picture

In a crisis you get one chance: your first and last. Being lucky does not mean that you are good. You may manage threats for a while; however, luck eventually runs out, and panic, chaos and confusion set in, eventually leading to collapse.

Geary Sikich: entrepreneur, consultant, author and business lecturer

Geary Sikich is a Principal with Logical Management Systems, Corp., a consulting and executive education firm focused on enterprise risk management and issues analysis; the firm’s web site is www.logicalmanagement.com. Geary is also engaged in the development and financing of private placement offerings in the alternative energy sector (biofuels, etc.), multi-media entertainment and advertising technology, and food products. Geary developed LMSCARVER™, the “Active Analysis” framework, which directly links key value drivers to operating processes and activities. LMSCARVER™ provides a framework that enables a progressive approach to business planning, scenario planning, performance assessment and goal setting.

Prior to founding Logical Management Systems, Corp. in 1985, Geary held many senior operational management positions in a variety of industry sectors. Geary served as an officer in the U.S. Army, responsible for the initial concept design and testing of the U.S. Army’s National Training Center and other related activities. Geary holds an M.Ed. in Counseling and Guidance from the University of Texas at El Paso and a B.S. in Criminology from Indiana State University. Geary is presently active in Executive Education, where he has developed and delivered courses in enterprise risk management, contingency planning, performance management and analytics. Geary is a frequent speaker on business continuity issues and business performance management. He is the author of over 410 published articles and four books, his latest being “Protecting Your Business in a Pandemic,” published in June 2008 (available on Amazon.com).

Geary has spoken on high-profile continuity issues, developed and validated over 4,000 plans, and conducted over 450 seminars and workshops worldwide for over 100 clients in energy, chemical, transportation, government, healthcare, technology, manufacturing, heavy industry, utilities, legal & insurance, banking & finance, security services, institutions and management advisory specialty firms. Geary can be reached at (219) 922-7718.