I’ve been building custom knowledge agents (AKA chatbots) for a few weeks, and Greg asked me to share some background on how you build these tools and why. The ‘how’ is covered in a short demo video below, but before you start building nodes, it’s worth thinking about what these new chatbots are and what they can do for you.

First, we should ask what chatbots are and why they weren’t so helpful before.

What’s a Chatbot & Why They Weren’t Great

We’ve all experienced chatbots by now, whether in customer support or in a website’s FAQ section. In some ways, these are text extensions of the old call trees (“press 1 for sales, 2 for customer support”), where each answer from the user sends you down a different branch of the chain. Unlike call trees, however, these chatbots could give appropriate answers and had built-in loops, so you could ask several questions in each session.

Unfortunately, these chatbots were challenging to get right because the branching was built manually: each question node had ‘hardwired’ answers leading to subsequent questions. If the developer got the question-and-answer flow wrong, the user could be led in the wrong direction or get stuck in a loop. You also had to anticipate the exact questions people were likely to ask and the answers they needed, which limited the usefulness of these tools for more complex searches. You’ve probably experienced this on a troubleshooting page where your exact problem isn’t listed, so there’s no way to get an answer from the session. Hopefully, the developers added a ‘contact us’ option somewhere.
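To make that limitation concrete, here is a minimal, hypothetical sketch in Python of a hardwired call-tree bot. The menu text, node names (`TREE`, `run`), and answers are invented for illustration; the point is simply that any input the developer didn't wire up leads to a dead end.

```python
# A hypothetical, minimal "call tree" chatbot: every node has hardwired
# options, and each option leads to a fixed next node or a canned answer.
TREE = {
    "start": {
        "prompt": "Press 1 for sales, 2 for customer support.",
        "options": {"1": "sales", "2": "support"},
    },
    "sales": {"answer": "Our sales team is available 9-5, Monday to Friday."},
    "support": {
        "prompt": "Press 1 for password resets, 2 for billing.",
        "options": {"1": "password", "2": "billing"},
    },
    "password": {"answer": "Use the 'Forgot password' link on the login page."},
    "billing": {"answer": "Billing questions go to the accounts team."},
}


def run(choices: list[str]) -> str:
    """Walk the tree with the user's inputs and return where they end up."""
    node = TREE["start"]
    for choice in choices:
        next_key = node.get("options", {}).get(choice)
        if next_key is None:
            # The developer never anticipated this input: dead end.
            return "Sorry, I didn't understand. Please contact us."
        node = TREE[next_key]
        if "answer" in node:
            return node["answer"]
    # Inputs ran out before reaching an answer: show the current menu again.
    return node.get("prompt", "")


print(run(["2", "1"]))
print(run(["my printer is on fire"]))
```

Calling `run(["2", "1"])` walks support, then password resets, and returns the canned answer, but a free-text question like `run(["my printer is on fire"])` falls straight through to the fallback message, because no branch exists for it.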

So, until now, chatbots have mostly been OK: able to handle pretty narrow Q&As but unable to manage complex questions.

So what’s new?

With that in mind, you might not fancy manually wiring up a clunky set of nodes, hoping you can anticipate your user’s questions.

Luckily, you don’t have to anymore, because the availability of AI tools has completely changed how chatbots work. The quick explanation is that LLMs (large language models) let you ask and answer questions in natural language, using NLP (natural language processing). And the processors needed for this kind of work are now widely available.

So instead of following hardwired Q&A paths, these new chatbots do all the work themselves and return the response they believe is most suitable.

Here’s an easy example.

I have a chatbot that uses my books as a knowledge base. One of the books – Beyond The Spreadsheet – explains how to conduct a risk assessment and has a section on ‘grading risks’. So if someone asks, ‘How can I grade my risks?’, the model will identify that section as a good match to answer that question. So far, so straightforward.

But if I ask, ‘Who should lead the risk management program?’, there’s no section with that as a title. However, by ‘reading’ the text in the knowledge base, the model identifies a section on managing the program and extracts the answer by matching the meaning of the question with the text. In this case, instead of relying on an exact text match, NLP allows it to find the best answer based on what the question means.
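One common way this kind of meaning-based matching is done is to turn each section and the question into numeric vectors (embeddings) and pick the section whose vector points in the most similar direction, measured by cosine similarity. I’m not claiming this is exactly what any particular platform does internally. The sketch below assumes hand-made three-number vectors; a real embedding model would produce much longer vectors, and the section names, topic dimensions, and values here are all hypothetical.

```python
import math

# Hypothetical, hand-made "meaning" vectors. A real system would get these
# from an embedding model. Each of the three invented dimensions stands for a
# topic: [grading/scoring risk, program leadership/roles, crisis response]
SECTIONS = {
    "Grading risks": [0.9, 0.1, 0.1],
    "Managing the risk program": [0.2, 0.9, 0.1],
    "Running a crisis team": [0.1, 0.3, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: close to 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def best_section(question_vec: list[float]) -> tuple[str, float]:
    """Return the section whose vector is closest in meaning to the question."""
    scored = {name: cosine(question_vec, vec) for name, vec in SECTIONS.items()}
    name = max(scored, key=lambda n: scored[n])
    return name, scored[name]


# Assumed vector for "Who should lead the risk management program?":
# it leans heavily on the leadership/roles dimension.
question = [0.15, 0.85, 0.2]
print(best_section(question))
```

The question doesn’t exactly match any section title, but its (assumed) vector sits closest to ‘Managing the risk program’, so that section wins with a score near 1.0.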

So I can now ask the model questions in natural language, and it will find the most appropriate answer based on the knowledge base.

But, best of all, if it’s not sure, it says, ‘I don’t know.’

Why No Answer is a Good Thing

Surely I want an answer: what use is it when I hear ‘I don’t know’?

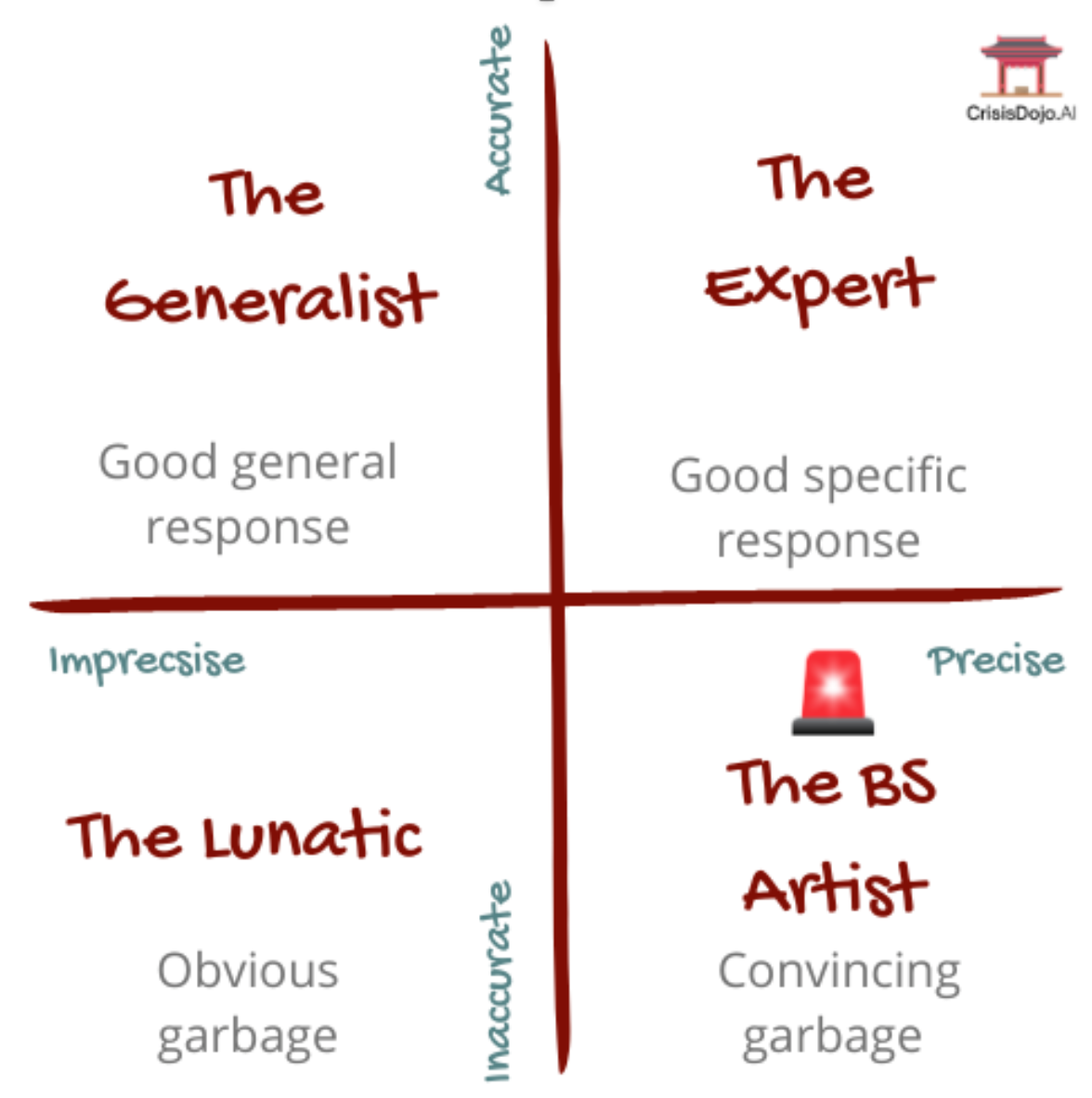

Well, if you recall, one big issue with AI is hallucinations: very plausible-sounding answers that are, in fact, garbage. Unlike the nonsense answers from the lunatic personality, it’s not obvious that these made-up answers are wrong. Like a convincing human BS artist, the AI can give you eloquent, convincing, detailed, and absolutely wrong answers.

Not what you want from a technical knowledge base.

This is why ‘I don’t know’ is a great response: instead of making something up, the chatbot tells you it can’t answer your question from its knowledge base. You don’t get a fabricated answer.

(Oh, and if you want a fallback to deal with those out-of-left-field questions, you can also program the chatbot to go to ChatGPT or some other general model for an answer. So when my chatbot returns ‘I don’t know’, instead of passing that on to the user, it says something like, ‘Unfortunately, I don’t have an answer to that question in my knowledge base, but I’ll ask my cousin ChatGPT.’ The answer that comes back is labeled as coming from ChatGPT, so the user knows it’s not from the knowledge base. This backup isn’t necessary for a custom chatbot, but if you can see a need for both custom and general answers, that’s how you do it.)
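The ‘I don’t know’ behavior and the labeled fallback can be sketched as a simple rule: only answer from the knowledge base when the best match is strong enough; otherwise either say so or hand off to a general model and label the result. The threshold (`MIN_SCORE`), the `ask_general_model` stub, and the sample knowledge-base answer are hypothetical; a real bot would call the general model’s API at that point, and real platforms may decide when they’re ‘not sure’ in their own way.

```python
from typing import Optional

# Hypothetical threshold: below this similarity score, the knowledge base is
# treated as having no good answer. The value is an assumption for illustration.
MIN_SCORE = 0.75


def ask_general_model(question: str) -> str:
    """Hypothetical stand-in for a call to a general model such as ChatGPT.
    A real version would call that service's API; this stub just echoes."""
    return f"(general-model answer to: {question})"


def answer(question: str, kb_answer: Optional[str], score: float,
           use_fallback: bool = True) -> str:
    """Answer from the knowledge base only when the match is strong enough."""
    if kb_answer is not None and score >= MIN_SCORE:
        return kb_answer
    if not use_fallback:
        # No good match and no fallback: admit it instead of making something up.
        return "I don't know."
    # Label the fallback so the user knows it didn't come from the knowledge base.
    return (
        "Unfortunately, I don't have an answer to that question in my "
        "knowledge base, but I'll ask my cousin ChatGPT.\n"
        "[From ChatGPT] " + ask_general_model(question)
    )


print(answer("How can I grade my risks?", "Use the grading matrix.", 0.92))
print(answer("What's the capital of Peru?", None, 0.12, use_fallback=False))
print(answer("What's the capital of Peru?", None, 0.12))
```

Keeping the fallback behind a flag mirrors the point above: the backup is optional, and without it the bot simply says it doesn’t know.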

The Killer Feature: The Custom Knowledge Base

This (positive) non-answer is possible because of the other significant advancement: these LLMs can now be pointed at a custom knowledge base. So unlike ChatGPT or Bard, the LLM only looks at the data you provide, which lets you give it a specific dataset designed for the task at hand.
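In many systems, ‘pointing an LLM at a custom knowledge base’ is done with what’s often called retrieval-augmented generation: the relevant passages are retrieved from your data and placed in the prompt, along with an instruction to answer only from them. The function below is a hypothetical sketch of that prompt-building step; the name `build_prompt` and the instruction wording are assumptions, and the instruction asks the model to stay within the passages rather than guaranteeing it will.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to use only the supplied passages.

    Hypothetical sketch: the passages would come from a retrieval step over
    your own knowledge base, and the returned text would be sent to an LLM.
    """
    # Number each passage so the model (and you) can refer back to it.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using ONLY the numbered passages below. "
        "If they do not contain the answer, reply exactly: I don't know.\n\n"
        f"Passages:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


print(build_prompt(
    "Who should lead the risk management program?",
    ["(passage from the section on managing the program)"],
))
```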

In my example above, it’s my books on risk and crisis because I don’t want a general description of risk culled from 1000 web pages: I want the user to get the definition that’s consistent across my whole system.

But it could also be a set of technical papers relating to your business. Or FAQs for onboarding new users or staff.

Whatever the case, the chatbot gives domain- or company-specific answers, not the general answers you would get from a Google search or ChatGPT.

You could even have proprietary company data at your fingertips in a closed or on-prem system.

But before you dive in, keep this in mind. GIGO: garbage in, garbage out. The underlying data has to be clearly written and able to answer the questions you’re anticipating. Without that foundation, the bot has no chance of producing good answers.

What Can Your Chatbot Do?

So what does this mean for you?

It means that you can quickly build a custom chatbot to answer natural language questions whenever people need system-, company-, or organization-specific information. It can return the exact match, a summary of a more complex answer, or a mix of the two. So this is much closer to a conversation with a subject matter expert than the old-fashioned, manually linked Q&A style of chatbot. Plus, you only get answers from the specific knowledge base, not a general ‘Google search’ result or a fabricated answer from an enthusiastic but wrong AI.

Here’s One I Built Earlier

Hopefully, you’re now fired up and ready to deploy a chatbot, so how do you build one?

Here’s a demo of a chatbot structure I’ve built to give you an idea of what’s involved. I’m using a platform called Botpress, which, difficulty-wise, is around the same as building a webpage from scratch (building, not fiddling with something on a pre-built site). You also need some understanding of how these tools work to find your way around. But overall, I’d say it’s a 4-ish out of 10 technically, where 1 is asking ChatGPT a question and 10 is building models from the ground up.

(Again, remember GIGO, so put aside time to prep your data too.)

But once your knowledge base is prepared and you’re ready to dive in, here’s what it looks like.

YouTube link: https://youtu.be/9RgIh_cvqfc

And if you want to see one in action, I have a chatbot on my website that answers risk and crisis questions from a knowledge base, with a ChatGPT fallback.

What’s Next?

A few months back, I was briefing an executive team on AI in risk and security and said that I thought these custom knowledge base agents were the next big thing. I was right about that, but wrong about the timing: I thought this was a late-2023 or early-2024 development, starting with big firms putting these in place.

However, it’s mid-2023, and we already have these custom-built knowledge agents that pretty much anyone can build.

That’s why I believe that custom knowledge agents like this will be one of the fastest and most exciting AI developments affecting how we teach, troubleshoot issues, solve difficult technical problems, onboard staff, and who knows what else.

So the answer to ‘what’s next?’ is partly down to the developer community and what they build. But it’s largely going to be down to us, the users, finding new, innovative, and valuable ways to use this technology to spread and share knowledge.

That’s enough from me: it’s time for you to go and build a bot.

Andrew Sheves Bio

Andrew Sheves is a risk, crisis, and security manager with over 25 years of experience managing risk in the commercial sector and in government. He has provided risk, security, and crisis management support worldwide to clients ranging from Fortune Five oil and gas firms, pharmaceutical majors and banks to NGOs, schools and high net worth individuals. This has allowed him to work at every stage of the risk management cycle from the field to the boardroom. During this time, Andrew has been involved in the response to a range of major incidents including offshore blowout, terrorism, civil unrest, pipeline spill, cyber attack, coup d’état, and kidnapping.

He can be reached at: andrew@andrewsheves.com