Explainable artificial intelligence (XAI) is a set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning algorithms.

Explainable AI is used to describe an AI model, its expected impact, and potential biases. It helps characterize model accuracy, fairness, transparency, and outcomes in AI-powered decision-making. Explainable AI is crucial for an organization in building trust and confidence when putting AI models into production. AI explainability also helps an organization adopt a responsible approach to AI development.

As AI becomes more advanced, humans find it harder to comprehend and retrace how an algorithm came to a result. The whole calculation process turns into what is commonly referred to as a “black box” that is impossible to interpret. These black box models are created directly from the data, and not even the engineers or data scientists who create the algorithm can understand or explain exactly what is happening inside them or how the AI algorithm arrived at a specific result.

There are many advantages to understanding how an AI-enabled system has led to a specific output. Explainability can help developers ensure that the system is working as expected; it might be necessary to meet regulatory standards; or it might be important in allowing those affected by a decision to challenge or change that outcome.

Why explainable AI matters

It is crucial for an organization to fully understand its AI decision-making processes, supported by model monitoring and AI accountability, rather than trusting them blindly. Explainable AI can help humans understand and explain machine learning (ML) algorithms, deep learning, and neural networks.

ML models are often thought of as black boxes that are impossible to interpret. Neural networks used in deep learning are some of the hardest for a human to understand. Bias, often based on race, gender, age or location, has been a long-standing risk in training AI models. Further, AI model performance can drift or degrade because production data differs from training data. This makes it crucial for a business to continuously monitor and manage models to promote AI explainability while measuring the business impact of using such algorithms. Explainable AI also helps promote end-user trust, model auditability and productive use of AI. It also mitigates compliance, legal, security and reputational risks of production AI.
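To make "continuously monitor" concrete, the sketch below computes the population stability index (PSI), one common way to quantify how far a feature's production distribution has moved away from its training distribution. It is a minimal illustration that assumes a single numeric feature and equal-width bins fitted on the training data; the function name, bin count, and example data are our own choices, not part of any particular monitoring product.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare a feature's training (expected) and production (actual) distributions.

    Bin edges come from the range of the training data; production values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width if all training values are equal

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical example data: a uniform training feature and a shifted production copy.
training = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(training, training))  # 0.0, identical distributions
print(population_stability_index(training, shifted))   # well above 0.25
```

A PSI near zero means the two distributions match. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, though teams usually tune that threshold to their own data.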

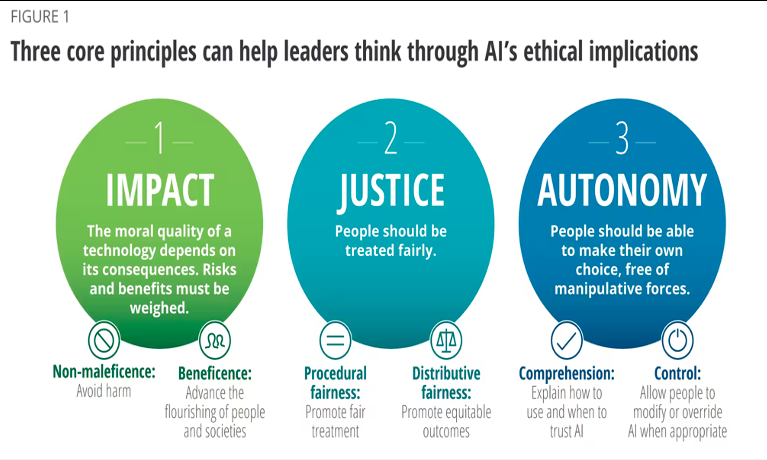

Explainable AI is one of the key requirements for implementing responsible AI, a methodology for the large-scale implementation of AI methods in real organizations with fairness, model explainability and accountability. To help adopt AI responsibly, organizations need to embed ethical principles into AI applications and processes by building AI systems based on trust and transparency.
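Fairness can be checked in part with simple group metrics. The sketch below computes demographic parity difference: the largest gap in positive-prediction rate between any two groups. It assumes binary predictions (1 meaning the favorable outcome) and a single group attribute; the function name and example data are hypothetical, and demographic parity is only one of several fairness definitions, which can conflict with one another.

```python
from collections import defaultdict
from typing import Hashable, Sequence


def demographic_parity_difference(
    predictions: Sequence[int], groups: Sequence[Hashable]
) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 means parity)."""
    positives: dict = defaultdict(int)
    totals: dict = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Hypothetical predictions for two groups of four people each.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
group_labels = ["a"] * 4 + ["b"] * 4
print(demographic_parity_difference(preds, group_labels))  # 0.5 (rates 0.75 vs 0.25)
```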

With explainable AI, as well as interpretable machine learning, organizations can gain access to the underlying decision-making of AI technology and are empowered to make adjustments. Explainable AI can improve the user experience of a product or service by helping the end user trust that the AI is making good decisions. When does an AI system give enough confidence in a decision that you can trust it, and how can the system correct errors that arise?

As AI becomes more advanced, ML processes still need to be understood and controlled to ensure AI model results are accurate. Let’s look at the difference between AI and XAI, the methods and techniques used to turn AI into XAI, and the difference between interpreting and explaining AI processes.

Comparing AI and XAI

What exactly is the difference between “regular” AI and explainable AI? XAI implements specific techniques and methods to ensure that each decision made during the ML process can be traced and explained.
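One simple way to make each decision traceable is to use a model whose reasoning can be logged step by step. The sketch below records every threshold test a small decision tree applies on its way to a prediction. The tree, its features (`debt_to_income`, `credit_score`), and its thresholds are hypothetical and chosen only for illustration; tracing more complex models such as neural networks requires dedicated attribution techniques.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A decision-tree node: internal nodes test one feature against a threshold."""
    feature: Optional[str] = None
    threshold: float = 0.0
    left: Optional["Node"] = None   # followed when value <= threshold
    right: Optional["Node"] = None  # followed when value > threshold
    label: Optional[str] = None     # set only on leaves


def predict_with_trace(node: Node, x: dict[str, float]) -> tuple[str, list[str]]:
    """Return the prediction plus a human-readable record of every test applied."""
    trace: list[str] = []
    while node.label is None:
        value = x[node.feature]
        if value <= node.threshold:
            trace.append(f"{node.feature}={value} <= {node.threshold}")
            node = node.left
        else:
            trace.append(f"{node.feature}={value} > {node.threshold}")
            node = node.right
    return node.label, trace


# Hypothetical credit-screening tree; features and thresholds are made up.
tree = Node(
    feature="debt_to_income", threshold=0.4,
    left=Node(feature="credit_score", threshold=650,
              left=Node(label="review"), right=Node(label="approve")),
    right=Node(label="decline"),
)

label, steps = predict_with_trace(tree, {"debt_to_income": 0.3, "credit_score": 700})
print(label)  # approve
print(steps)  # ['debt_to_income=0.3 <= 0.4', 'credit_score=700 > 650']
```

Because every prediction comes with the exact tests that produced it, a reviewer can audit a single decision without inspecting the whole model.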

AI, on the other hand, often arrives at a result using an ML algorithm, but the architects of the AI systems do not fully understand how the algorithm reached that result. This makes it hard to check for accuracy and leads to loss of control, accountability, and auditability.

Explainable AI techniques

XAI techniques rest on three main methods: prediction accuracy, traceability, and decision understanding. Prediction accuracy and traceability address technology requirements, while decision understanding addresses human needs. Explainable AI, especially explainable machine learning, will be essential if future warfighters are to understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.

Prediction accuracy

Accuracy is a key component of how successful the use of AI is in everyday operation. Prediction accuracy can be determined by running simulations and comparing XAI output to the results in the training data set. A widely used technique for this is Local Interpretable Model-Agnostic Explanations (LIME), which explains individual predictions of classifiers by approximating the ML algorithm locally with a simple, interpretable model.
